Week 5 - Gesture Recognition with TinyML

For this week's lab, we collected accelerometer data from the Xiao nRF52840 Sense and trained a machine learning (ML) model to detect two gestures: a punch and a flex.

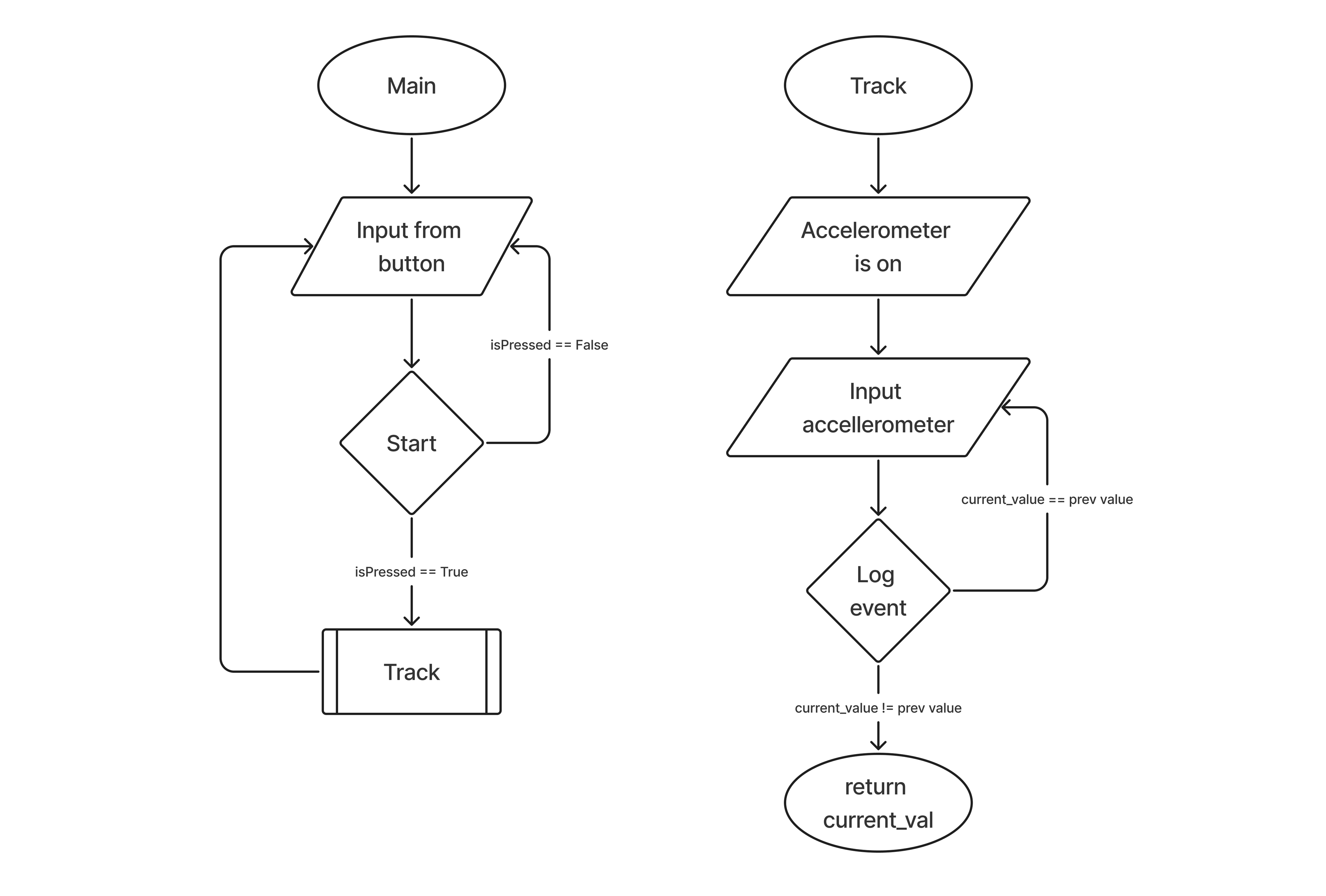

Flowchart

Before starting the lab, we created a flowchart to visualize the elements of a simple activity tracking system. The flowchart below can also be viewed here.

Data collection

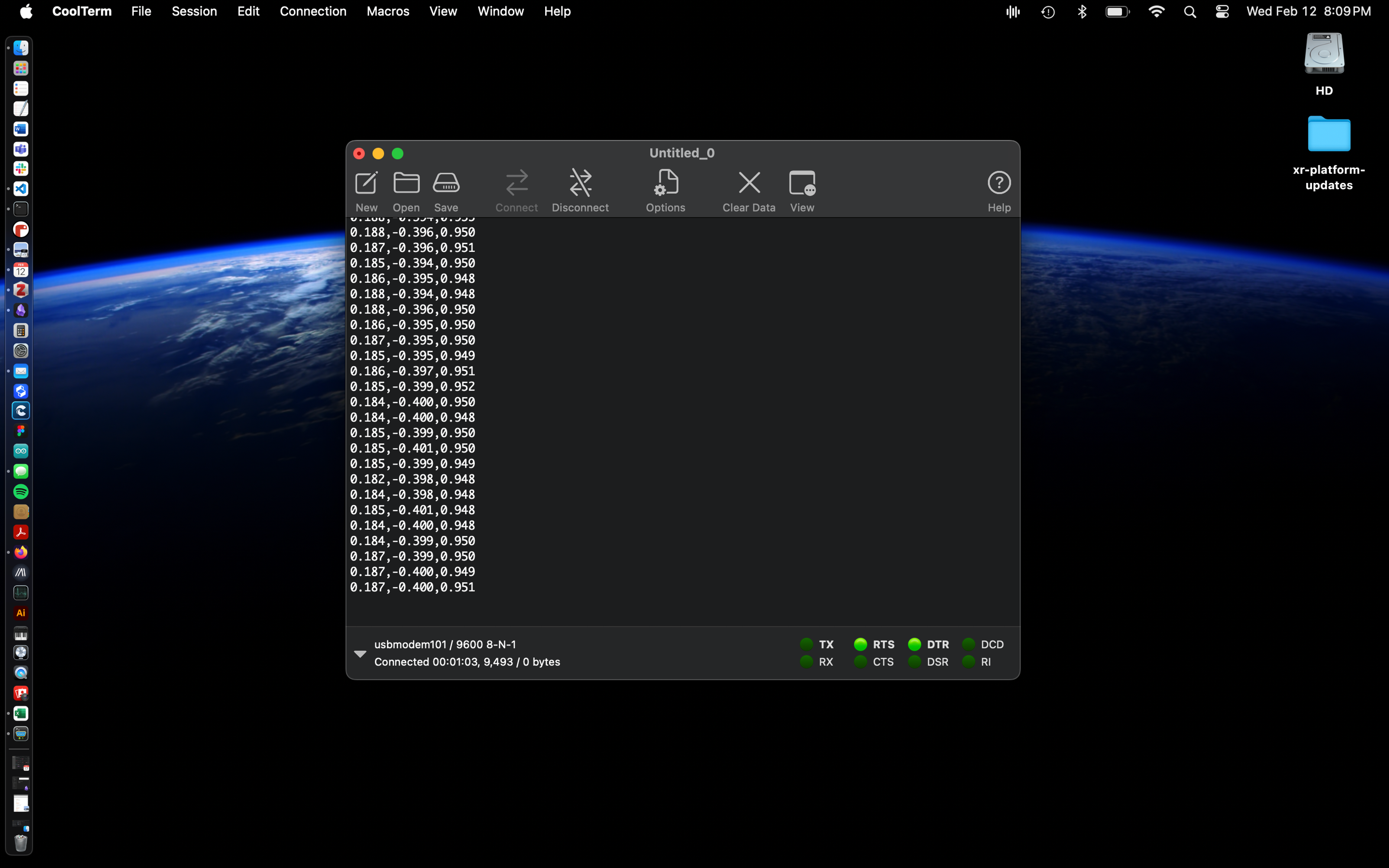

We first flashed a sketch to the board that reads the accelerometer and prints the X, Y, and Z values over serial. We then used CoolTerm to capture that serial output and save it to CSV files (click here to download the datasets).
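As a rough illustration of how the captured files can be turned into training examples, the Python sketch below parses CSV text into (x, y, z) samples and slices them into fixed-length, non-overlapping windows, flattening each window into one feature vector. It assumes every data line holds exactly three comma-separated values. The helper names `load_xyz` and `make_windows` and the `window_size` parameter are hypothetical, and the preprocessing in our Colab notebook may differ (for example in window length or normalization).

```python
import csv
import io
from typing import List, Tuple

Sample = Tuple[float, float, float]


def load_xyz(csv_text: str) -> List[Sample]:
    """Parse 'x,y,z' lines into samples, skipping headers and malformed lines."""
    samples: List[Sample] = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) != 3:
            continue  # blank line, or a line captured mid-print (assumed format: x,y,z)
        try:
            x, y, z = (float(v) for v in row)
        except ValueError:
            continue  # non-numeric row, e.g. a header such as "aX,aY,aZ"
        samples.append((x, y, z))
    return samples


def make_windows(samples: List[Sample], window_size: int) -> List[List[float]]:
    """Split samples into non-overlapping windows; drop any incomplete tail."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    windows: List[List[float]] = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        # Flatten [(x, y, z), ...] into [x0, y0, z0, x1, y1, z1, ...]
        windows.append([value for sample in window for value in sample])
    return windows
```

Each flattened window would then be one input row for the model, with a label taken from which file (punch or flex) it came from.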

Capturing punch gesture data:

Capturing flex gesture data:

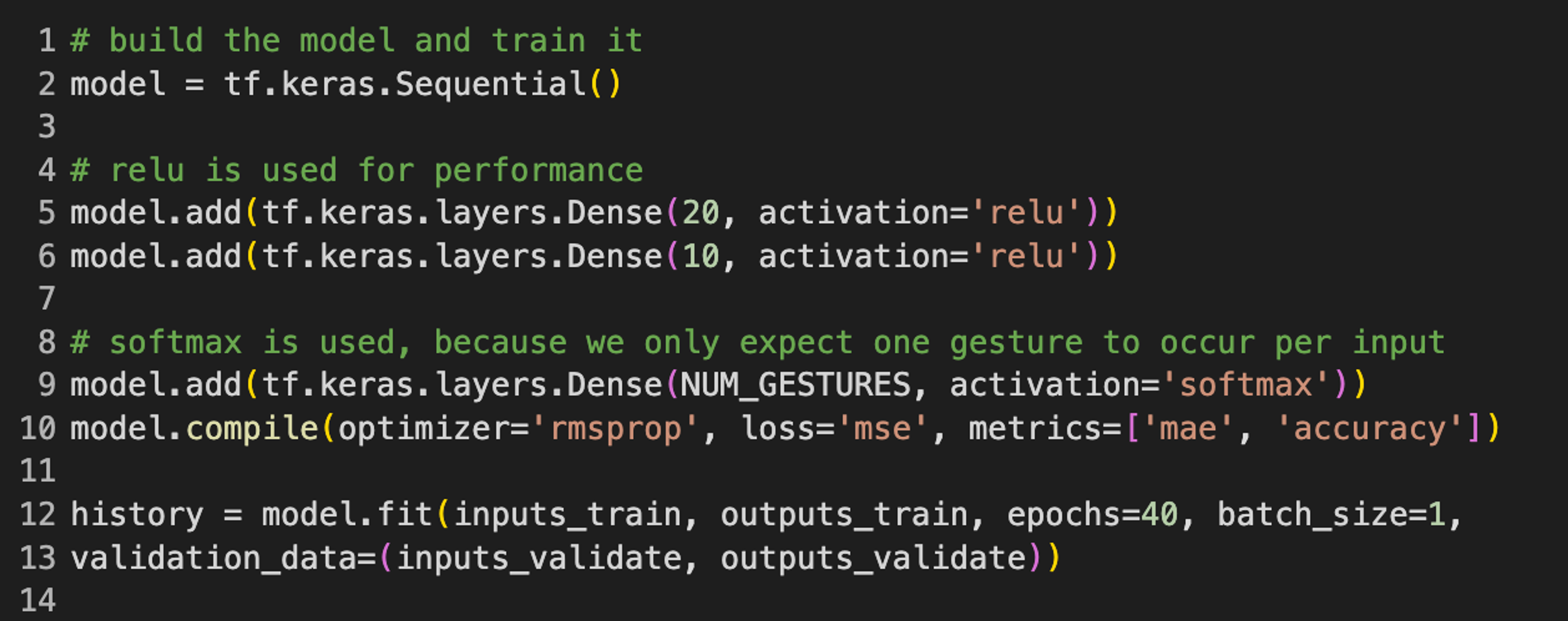

ML Model Training

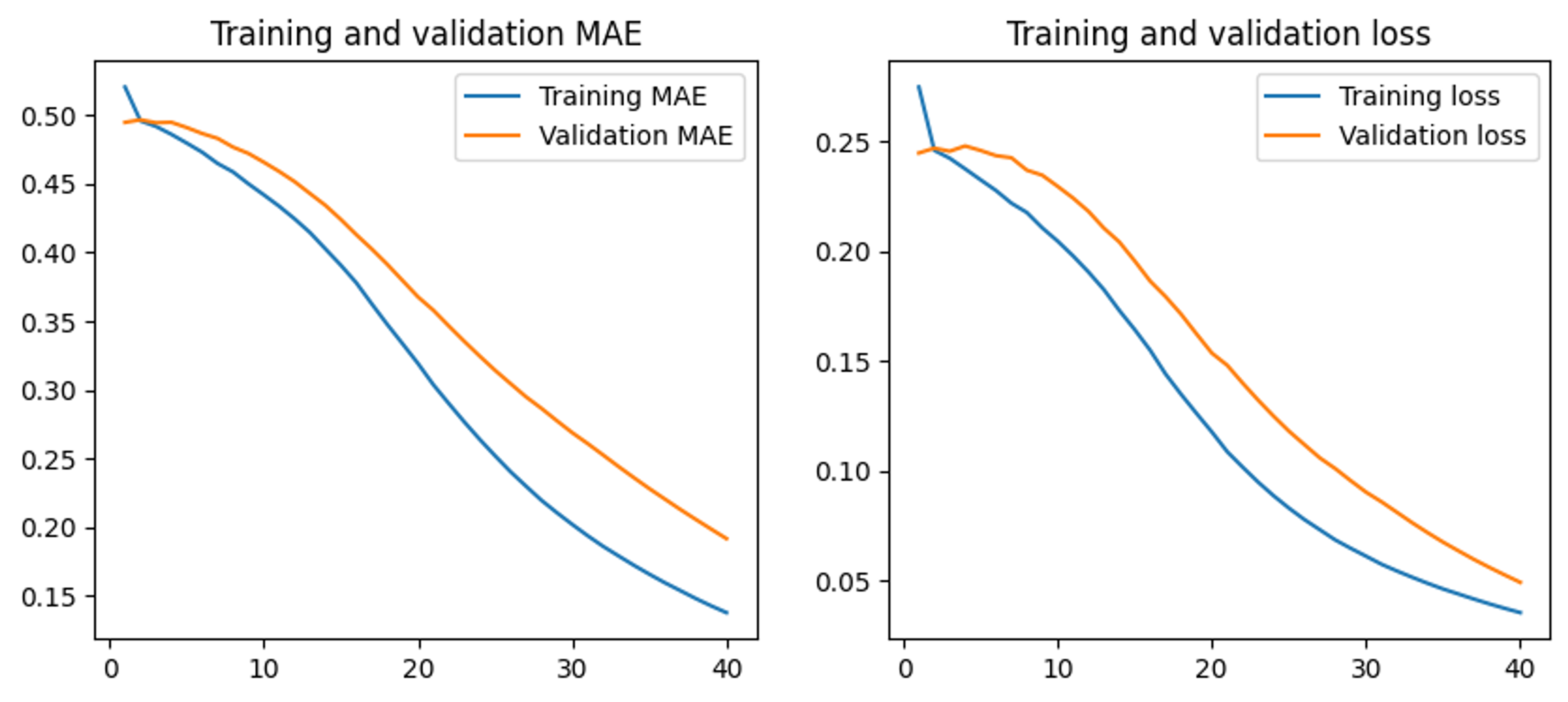

Next, we trained a simple ML model with TensorFlow that outputs a prediction for each gesture. We achieved low mean absolute error (MAE) and loss scores by training for 40 epochs with the other hyperparameters shown below. You can view the full Google Colab notebook here.
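To make the MAE figure concrete: assuming the model has one output per gesture and is trained against one-hot labels, MAE is the average absolute gap between each predicted value and its target. The pure-Python sketch below computes it by hand; the `GESTURES` order and the helper names are hypothetical, and on the same data the result should match what Keras reports for an `'mae'` metric.

```python
from typing import List, Sequence

# Hypothetical class order; it must match the order of the model's outputs.
GESTURES = ["punch", "flex"]


def one_hot(label: str) -> List[float]:
    """Encode a gesture name as a one-hot target, e.g. 'flex' -> [0.0, 1.0]."""
    if label not in GESTURES:
        raise ValueError(f"unknown gesture: {label}")
    return [1.0 if g == label else 0.0 for g in GESTURES]


def mean_absolute_error(
    targets: Sequence[Sequence[float]], predictions: Sequence[Sequence[float]]
) -> float:
    """Average |target - prediction| over every output of every example."""
    diffs = [
        abs(t - p)
        for t_row, p_row in zip(targets, predictions)
        for t, p in zip(t_row, p_row)
    ]
    return sum(diffs) / len(diffs)
```

For example, two windows predicted as `[0.9, 0.1]` and `[0.2, 0.8]` against `one_hot("punch")` and `one_hot("flex")` give an MAE of about 0.15.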

To run the trained model on a microcontroller, we converted it to TensorFlow Lite and exported the converted model as a byte array saved in a file named model.h. We then replaced the model.h file in the IMU_Classifier example sketch from the Seeed_Arduino_LSM6DS3 library and flashed the microcontroller again.
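The byte-array export step can be sketched in plain Python: the function below renders the raw bytes of a `.tflite` model as a C array, much like the output of `xxd -i`. The array name `model` and the `model_len` variable are assumptions; the array name has to match whatever identifier the IMU_Classifier sketch passes to the TensorFlow Lite Micro model loader, so it is worth checking the sketch before replacing model.h.

```python
def to_c_header(model_bytes: bytes, var_name: str = "model", per_line: int = 12) -> str:
    """Render raw .tflite bytes as a C array definition (similar to `xxd -i`)."""
    lines = []
    for i in range(0, len(model_bytes), per_line):
        chunk = model_bytes[i:i + per_line]
        # Each byte as a two-digit hex literal; a trailing comma is valid in C initializers.
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {var_name}[] = {{\n"
        f"{body}\n"
        "};\n"
        f"const unsigned int {var_name}_len = {len(model_bytes)};\n"
    )
```

In a Colab notebook, the input could come from `tf.lite.TFLiteConverter.from_keras_model(model).convert()`, which returns the serialized model as bytes; the returned string can then be written to model.h with an ordinary `open(...).write(...)`.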

Model Testing

Our model was able to distinguish between the two gestures fairly well, although it seems sensitive to the rotation of the hand. For example, if the hand holding the microcontroller is rotated slightly during a flex gesture, the model tends to predict punch. Future work could involve fine-tuning the model to better distinguish between the two gestures.
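One likely reason for the rotation sensitivity: whenever the board is moving slowly, such as at the start and end of a gesture, the accelerometer mostly measures gravity, so rolling the wrist shifts that 1 g between axes even when the gesture itself is the same. The sketch below assumes the forearm runs along the board's X axis (we did not verify how the Xiao was oriented in the hand) and shows that a 30° roll moves half of g from Z onto Y. It also shows how the same rotation could augment recorded windows with slightly rotated copies; this is one possible direction for the future work mentioned above, not something we did in this lab, and `rotate_about_x` and `augment_window` are hypothetical helpers.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rotate_about_x(sample: Vec3, degrees: float) -> Vec3:
    """Rotate an (x, y, z) acceleration reading about the X axis (assumed forearm axis)."""
    theta = math.radians(degrees)
    c, s = math.cos(theta), math.sin(theta)
    x, y, z = sample
    return (x, c * y - s * z, s * y + c * z)


def augment_window(window: List[Vec3], max_degrees: float, rng: random.Random) -> List[Vec3]:
    """Return a copy of a gesture window with one random roll applied to every sample."""
    angle = rng.uniform(-max_degrees, max_degrees)
    return [rotate_about_x(sample, angle) for sample in window]


# Gravity straight down the Z axis, then the wrist rolls by 30 degrees.
print(rotate_about_x((0.0, 0.0, 1.0), 30))  # Y becomes about -0.5, Z about 0.866
```

Applying a single angle to the whole window keeps the motion pattern intact while changing only the orientation, which is the variation the model struggled with.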

Testing the model's ability to predict the gestures: