Week 7 - Activity recognition

For this week's lab, we collected data for five activities and trained a machine learning (ML) model for on-device activity recognition. The activities were ascending stairs, descending stairs, walking, standing, and sitting.

Data collection

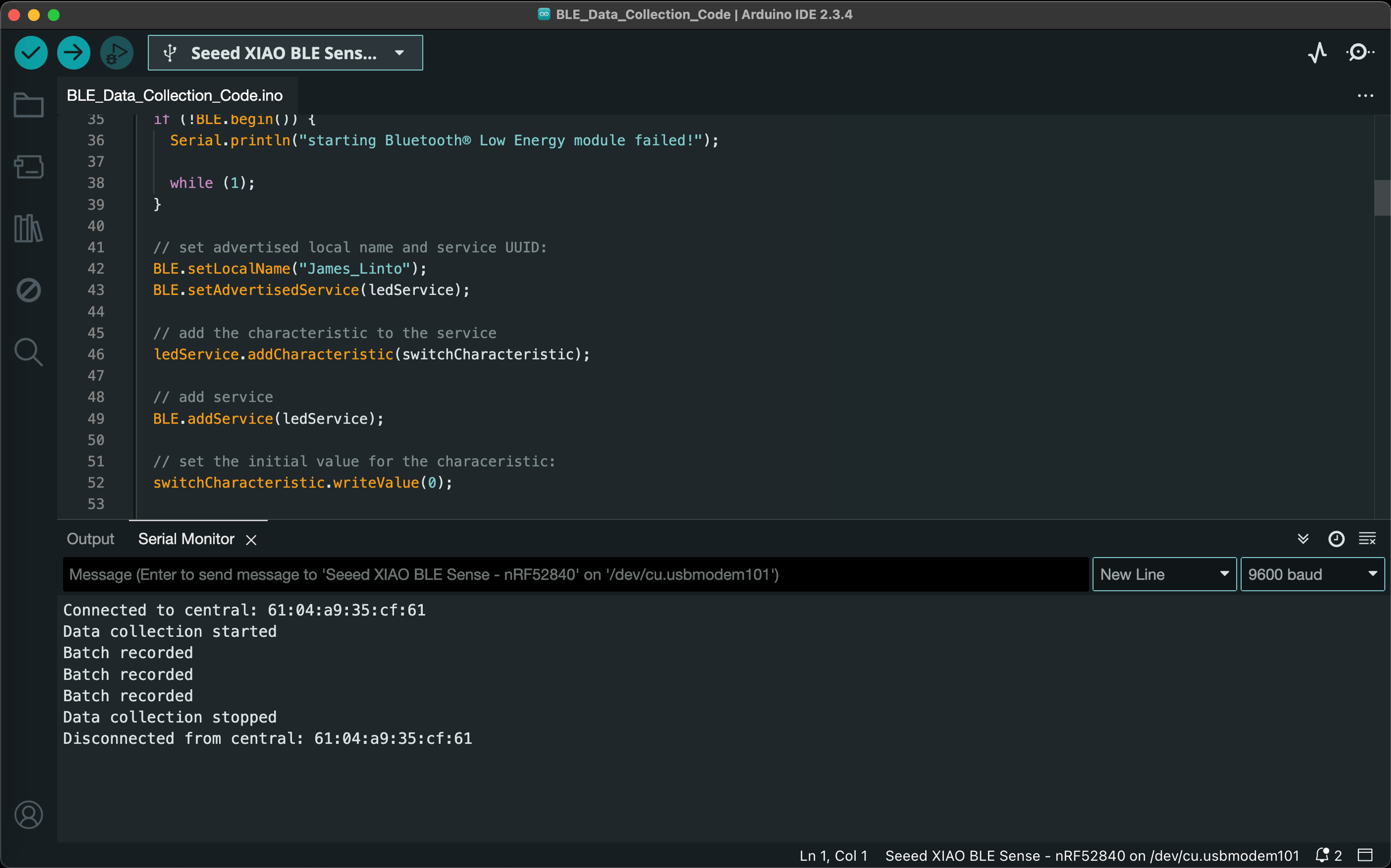

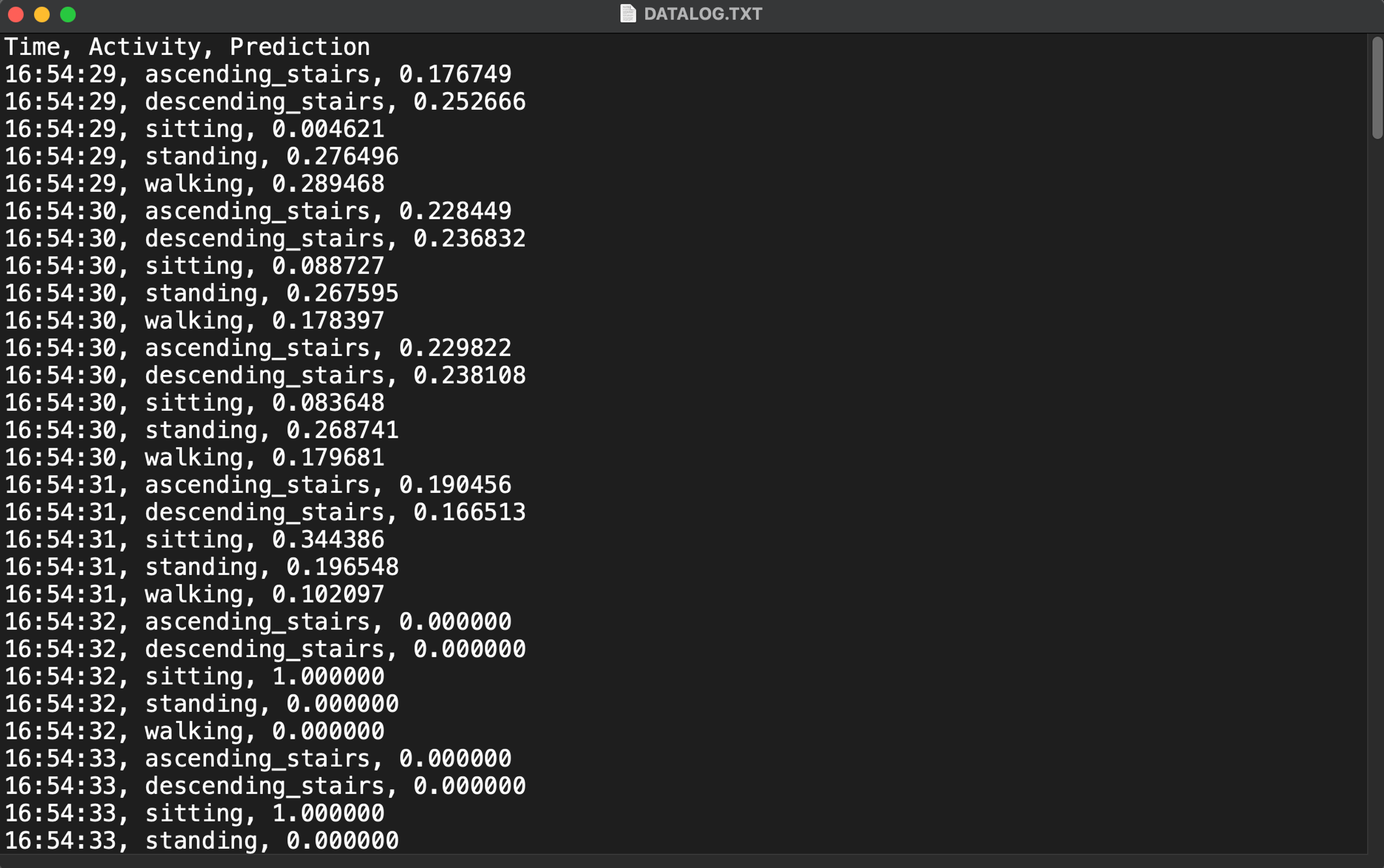

We captured data from both the accelerometer and the gyroscope for this lab. Data collection was started and stopped over a Bluetooth connection by sending an integer value of either 0 or 1. Timestamped batches were then collected at a rate of 100 samples per second and logged to the SD card.

Data preprocessing

Our original dataset was created as a single text file (download here) that needed to be separated into the five activities. This aspect of the project presented several challenges.

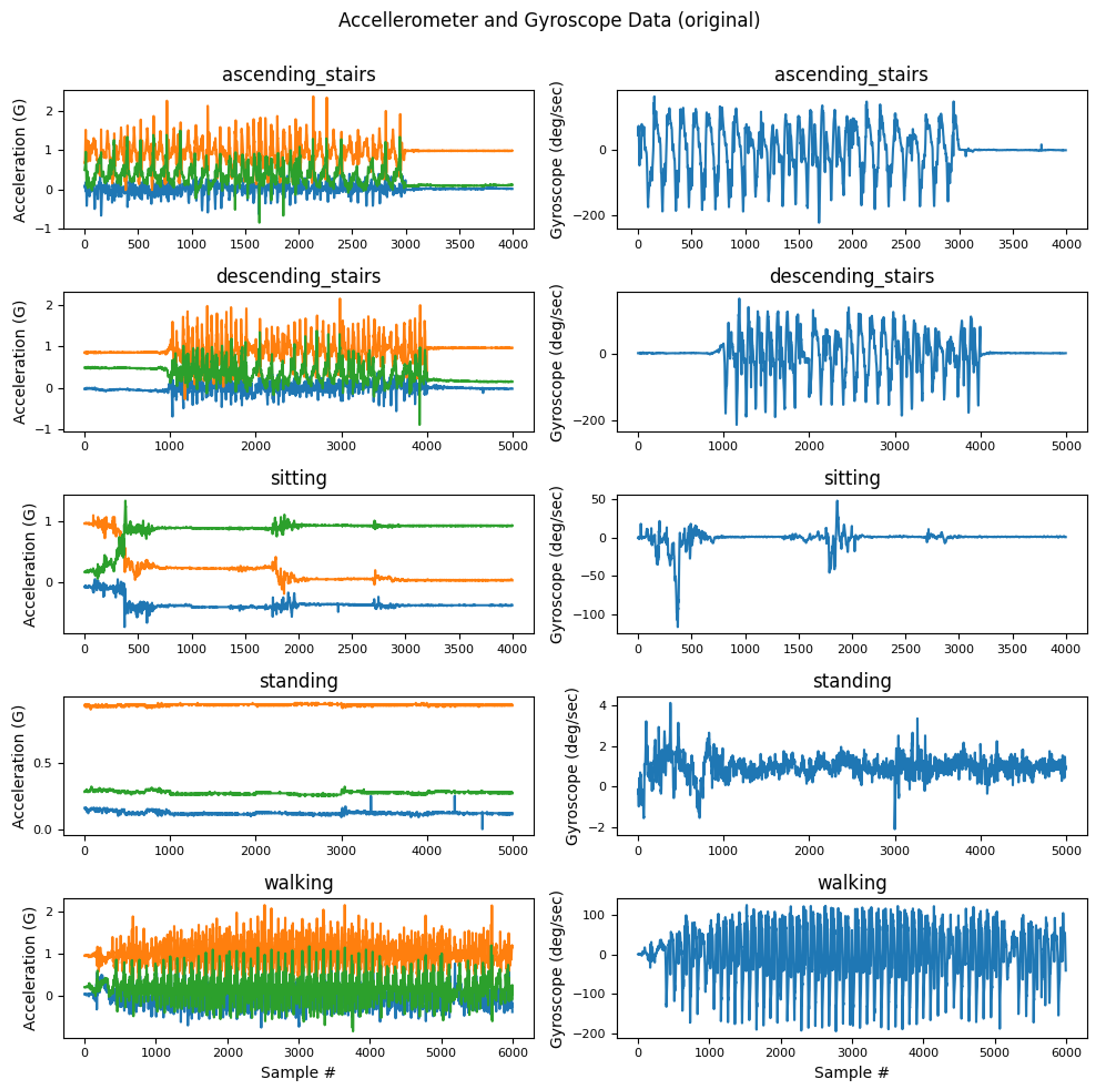

Since we recorded ascending and descending the stairs in one pass, we needed to determine which parts of the data corresponded to ascending and which to descending. Fortunately, we recorded the duration of each pass using a stopwatch and were able to retroactively calculate the approximate number of samples for each ascending and descending cycle. Plots for our accelerometer and gyroscope data before processing are shown below.
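The stopwatch-to-sample calculation can be sketched in Python as follows. This is a minimal illustration, not our actual Colab code: the function name `segment_bounds`, the assumption that segments strictly alternate between ascending and descending, and the example durations are all hypothetical. Only the 100 samples per second rate comes from our setup.

```python
SAMPLE_RATE_HZ = 100  # sample rate used during data collection

def segment_bounds(durations_s, sample_rate_hz=SAMPLE_RATE_HZ,
                   labels=("ascending", "descending")):
    """Convert consecutive stopwatch durations (seconds) into
    (label, start_index, end_index) sample ranges, end exclusive.

    Assumes the recording alternates through `labels` in order.
    """
    bounds = []
    start = 0
    for i, duration in enumerate(durations_s):
        n_samples = round(duration * sample_rate_hz)
        label = labels[i % len(labels)]
        bounds.append((label, start, start + n_samples))
        start += n_samples
    return bounds

# Hypothetical stopwatch readings, not the values we measured
for label, start, end in segment_bounds([12.5, 11.0, 13.2]):
    print(label, start, end)
```

Because stopwatch timing is only accurate to a fraction of a second, the resulting boundaries are approximate and samples near each boundary may be mislabeled.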

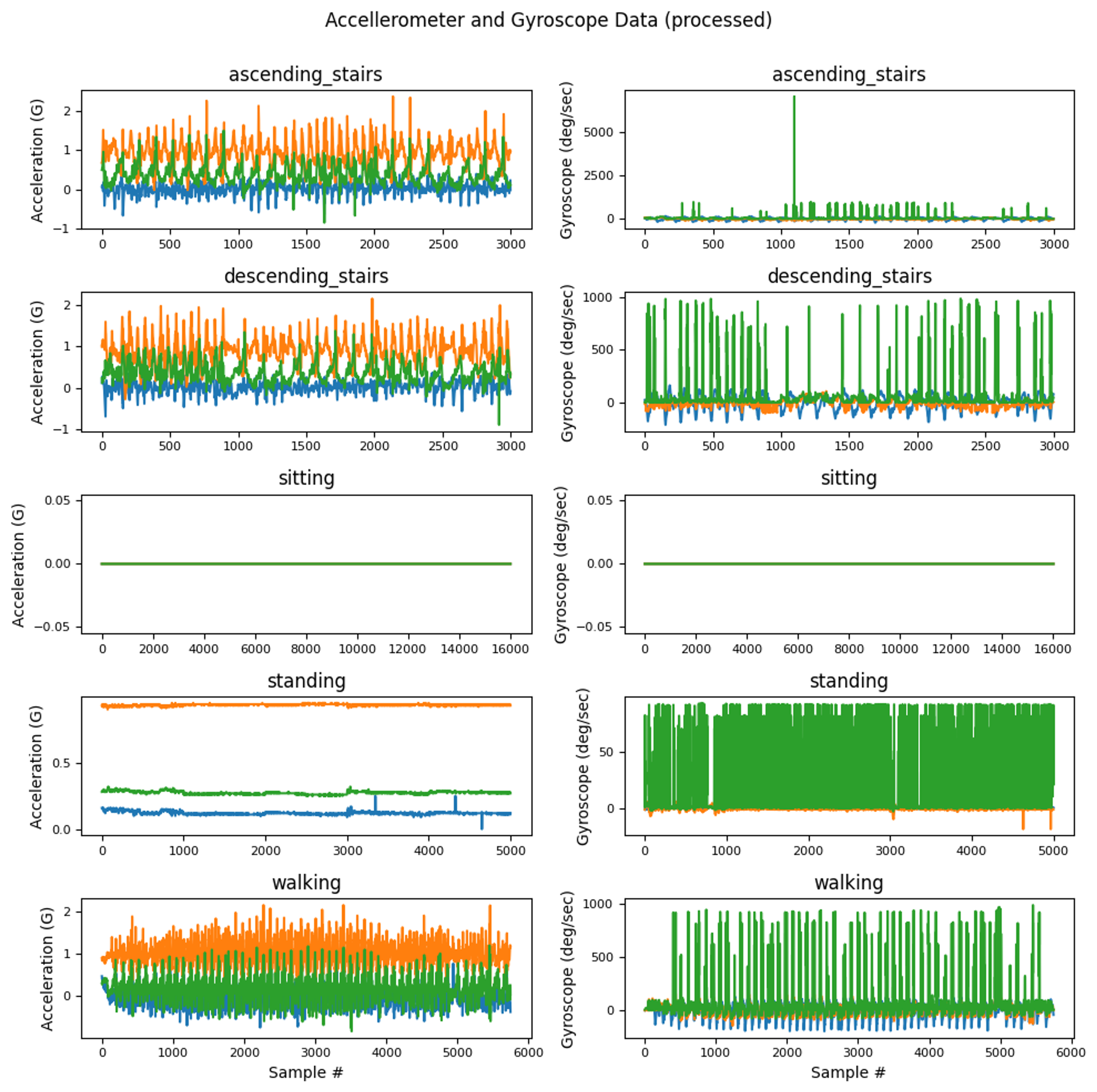

Additionally, our microcontroller did not delimit the values for the z-axis of the gyroscope. Instead, it concatenated the y- and z-axis values into a single, invalid string that needed to be separated. This task was simple when the z-axis value was negative, since we could split the string at the negative (-) character. When the z-axis value was positive, we simply split the character string in half and added one half of the data to the gyroscope's z-axis column (gZ). It was also necessary to convert null values to zeros and remove outliers before model training.
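A rough Python sketch of that splitting rule is shown here. The function name `split_fused_yz` is hypothetical, and two details are assumptions on top of what is described above: a leading minus sign is kept with the y value, and the z value is taken as the second half of the string. The halving step only gives the right answer when both values were printed with the same number of characters.

```python
def split_fused_yz(field):
    """Split a fused 'gY gZ' string that has no delimiter into two floats."""
    field = field.strip()
    cut = field.rfind("-")
    if cut > 0:
        # Negative z: the last minus sign marks where the z value starts.
        return float(field[:cut]), float(field[cut:])
    # Positive z: halve the characters, keeping any leading sign with y.
    sign = "-" if field.startswith("-") else ""
    digits = field[len(sign):]
    mid = len(digits) // 2
    return float(sign + digits[:mid]), float(digits[mid:])

print(split_fused_yz("1.25-0.50"))   # z is negative
print(split_fused_yz("-1.250.50"))   # z is positive, y is negative
```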

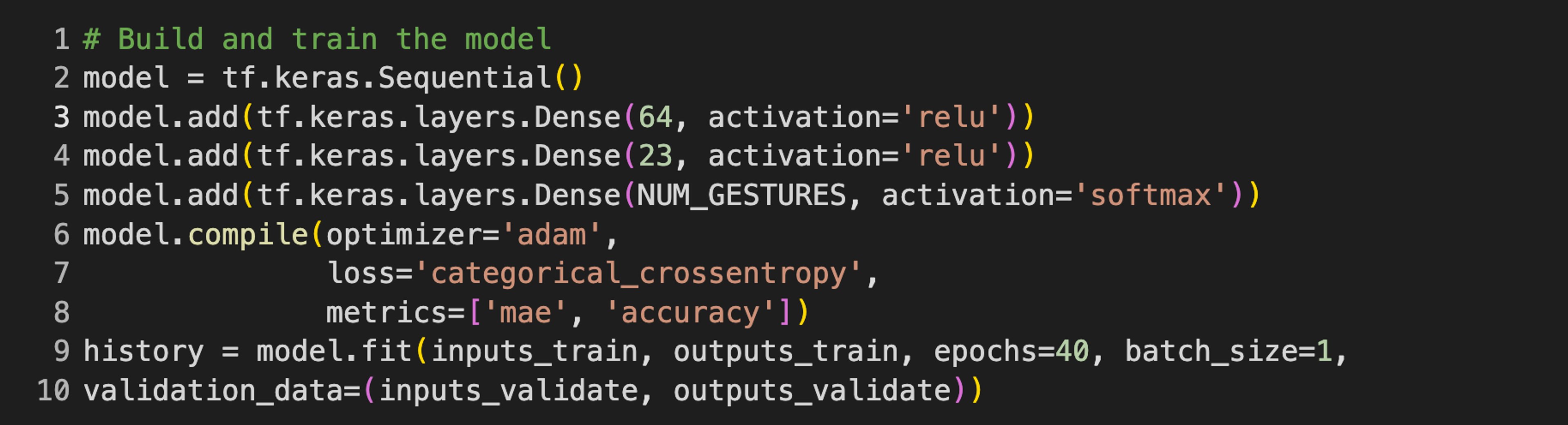

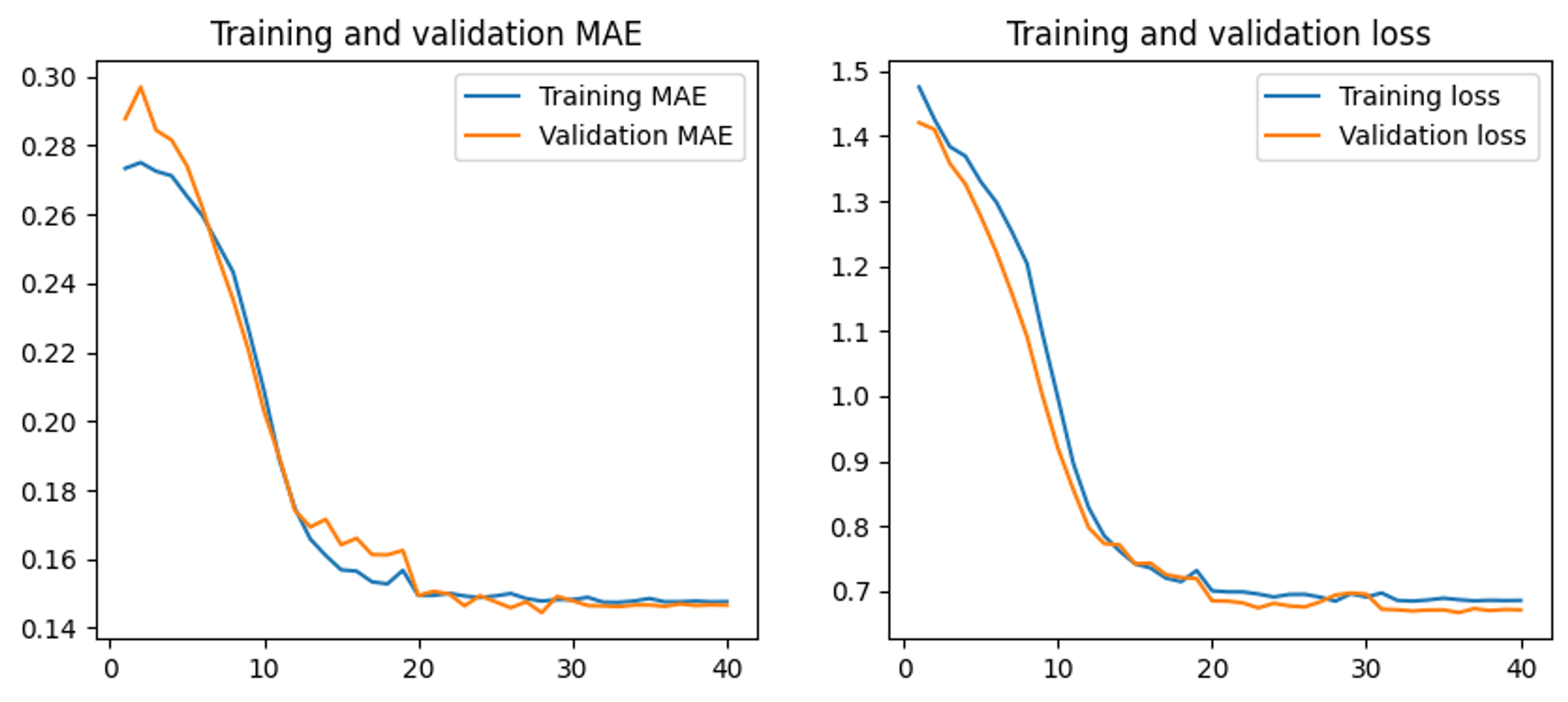

We then trained our model for 40 epochs and achieved a top validation accuracy of ~0.73 using the hyperparameters below. The model performance would likely improve with more precise data capture practices. Finally, we exported the model to TensorFlow Lite and generated a model.h file for our microcontroller. The data processing and model training code we used can be viewed on Google Colab here.
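For readers curious about what a model.h file contains, the sketch below renders raw bytes as a C byte array. It is an illustration only and not necessarily how our header was generated: the function name `bytes_to_c_header` and the variable names `model` and `model_len` are hypothetical, and the input bytes are a stand-in for the contents of an exported .tflite file.

```python
def bytes_to_c_header(data, var_name="model"):
    """Render raw bytes as C source defining a byte array and its length."""
    lines = []
    for i in range(0, len(data), 12):  # 12 bytes per line for readability
        chunk = data[i:i + 12]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {var_name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {var_name}_len = {len(data)};\n"
    )

# Stand-in bytes; in practice these would be read from the .tflite file,
# e.g. with open(path, "rb").read()
header = bytes_to_c_header(bytes([0x1c, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33]))
print(header)
```

The same result is commonly produced on the command line with `xxd -i`.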

Activity prediction

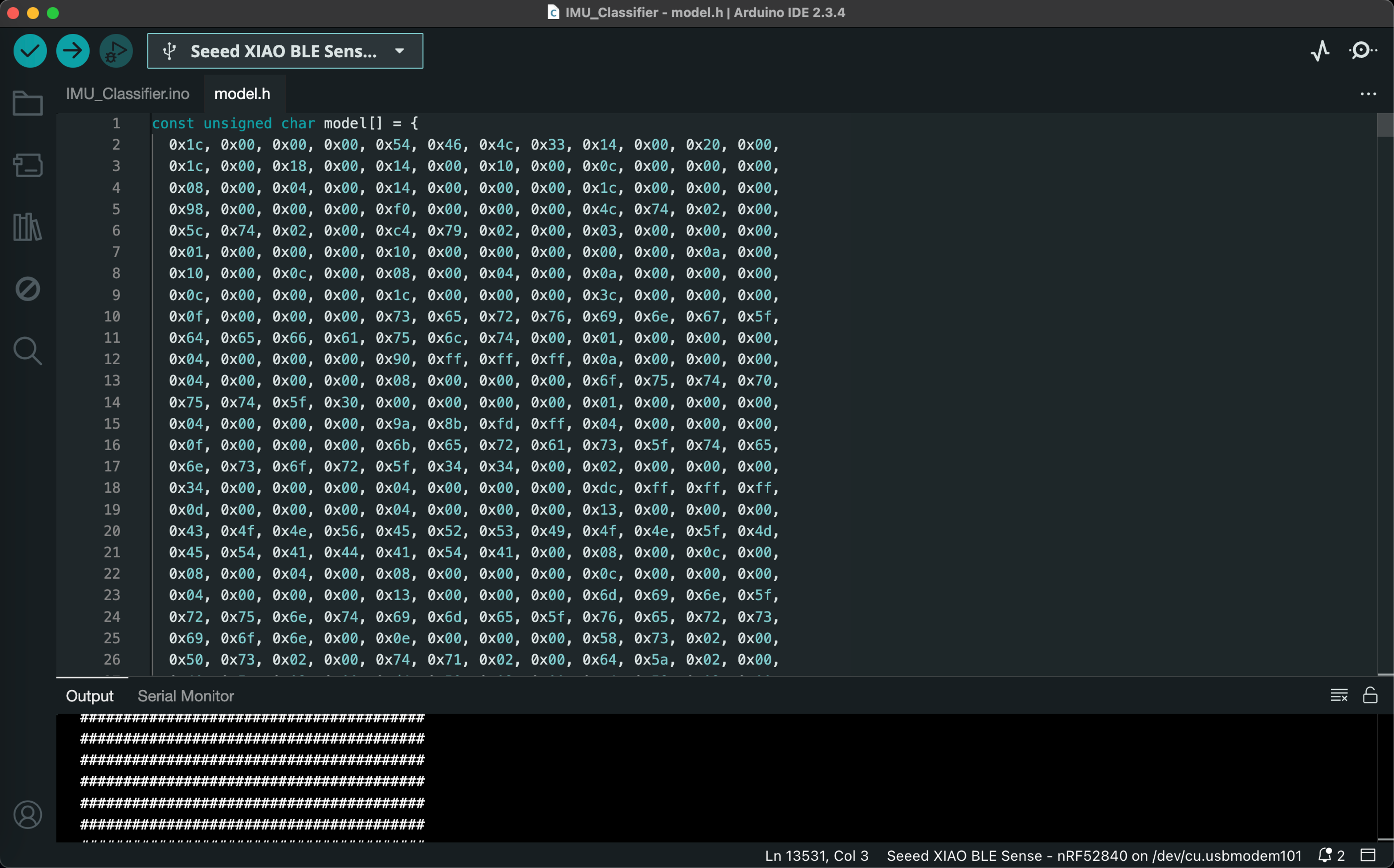

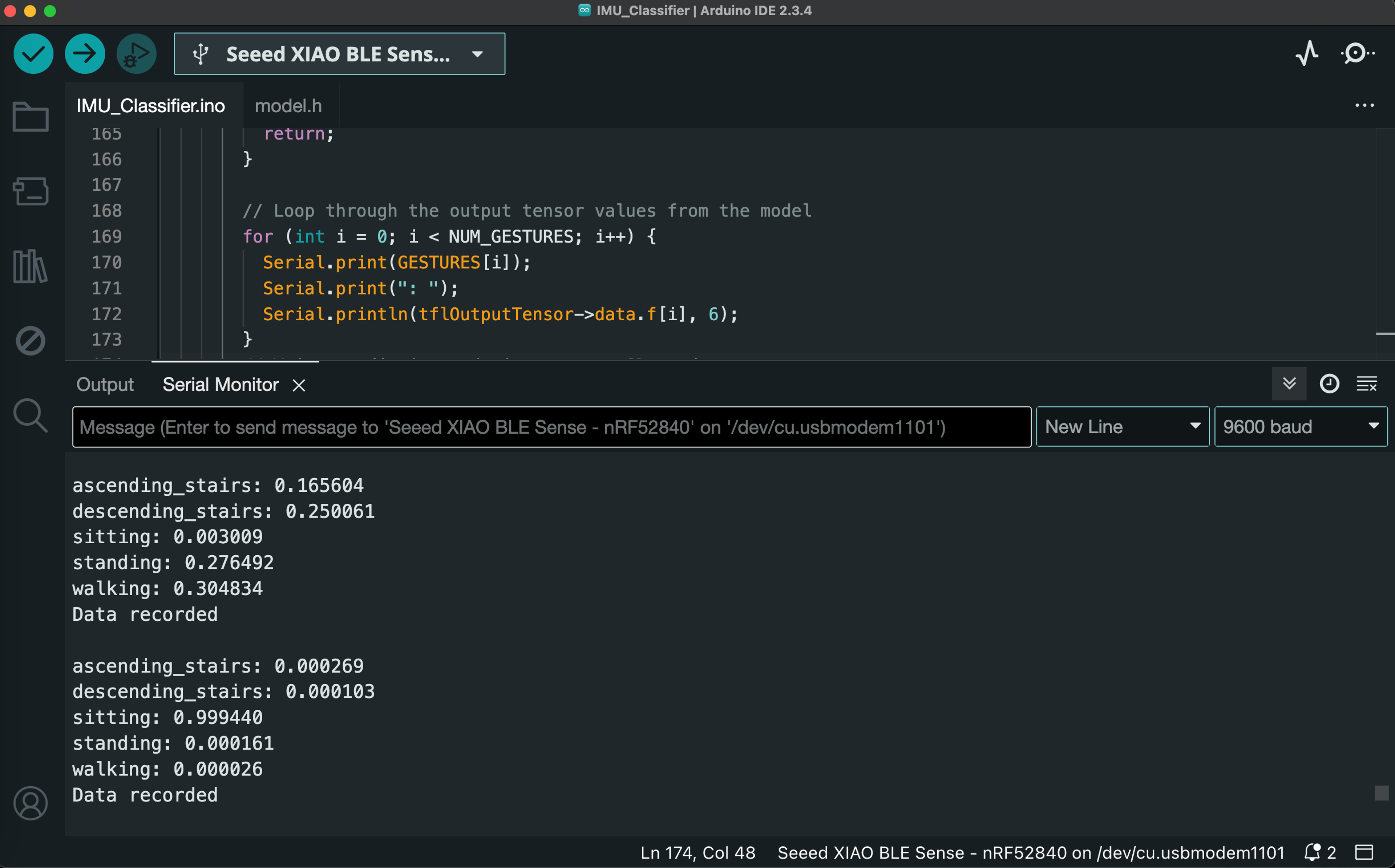

We flashed the microcontroller again, this time with code to predict activities from live sensor data. The model.h file created previously was added to the Arduino code as a single byte array.

Our model tends to over-predict "sitting" as the most likely activity. This might be due to the homogeneous nature of the dataset used during training or some form of class imbalance relative to the number of samples collected for the other activities. Nonetheless, our model generates new predictions when the device is moved, suggesting that it is able to distinguish between multiple activity classes. Prediction quality could likely be improved with more data and further fine-tuning of the model.
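One way to check for the class imbalance mentioned above is to count the samples per activity and derive inverse-frequency weights. The sketch below is something we did not try in this lab. The function name `class_weights` and the label counts are hypothetical and do not reflect our real dataset.

```python
from collections import Counter

def class_weights(labels):
    """Return inverse-frequency weights: n_samples / (n_classes * count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical label list in which "sitting" is over-represented
labels = ["sitting"] * 6 + ["walking"] * 2 + ["standing"] * 2
for activity, weight in class_weights(labels).items():
    print(activity, round(weight, 2))
```

Under-represented activities receive larger weights. Keras accepts weights like these through the `class_weight` argument of `fit`, keyed by integer class index rather than by name.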

We noticed our initial data collection code did not update the timestamp for each batch of data collected. This was fixed in the prediction code so the device adds updated timestamps for each batch of predictions.

Demo

Linto made a brief demo video showing the activity tracker in action.

Files

All our files for this lab can be downloaded here.